Since I metaphorically live in the Bay Area, I know a lot of people who think the AI singularity is imminent – probably to the huge detriment of the human race. I do not think this.[1] I find myself making my polite agree-to-disagree face when someone thinks the world is about to end in five years. Yet I do believe, sincerely, that civilization will end in the next century or two. Which in the eyes of normal Americans and American-adjacents makes me just as much of a crackpot. So I am writing this post to explain my views.

Last year I asked ChatGPT: “Is there a quick handle for the concept where the barrier to entry for lethal technologies is going down, so humanity is generically in more danger, agnostic of which specific existential risk is going to destroy high tech civilization?”

It answered: “Yes, that’s often referred to as the ‘democratization of destruction.’ This term encapsulates the idea that as technology advances, the ability to create and deploy lethal technologies becomes more accessible to individuals and smaller groups, thus increasing the overall risk to humanity.”

I googled the term and found only one sensible article about it. Written in 2014, it describes how fragile the world order becomes when it is increasingly easy for small actors to deploy:

- precision guided munitions

- cyber attacks on the power grid

- nuclear weapons, of course

- biotech like designer pathogens

- attacks on the “effectively undefended” “undersea economic infrastructure … which provides a substantial portion of the world’s oil and natural gas, while also hosting a web of cables connecting the global fiber-optic grid. The value of the capital assets on the U.S. continental shelves alone runs into the trillions of dollars”

- robotics

> …such advances will rapidly increase the potential destructive power of small groups, a phenomenon that might be characterized as the “democratization of destruction.”

It’s too bad that this term does not, in fact, seem to be popular. It’s a nice verbal handle for a concept that I think deserves to be crystallized more. When I read about all the vectors of existential risk people worry about, I thought: It doesn’t matter which of these gets us, but something is going to get us, because the world is large and the barrier to entry for deploying any one of these is going down. It’s just a numbers game from here.

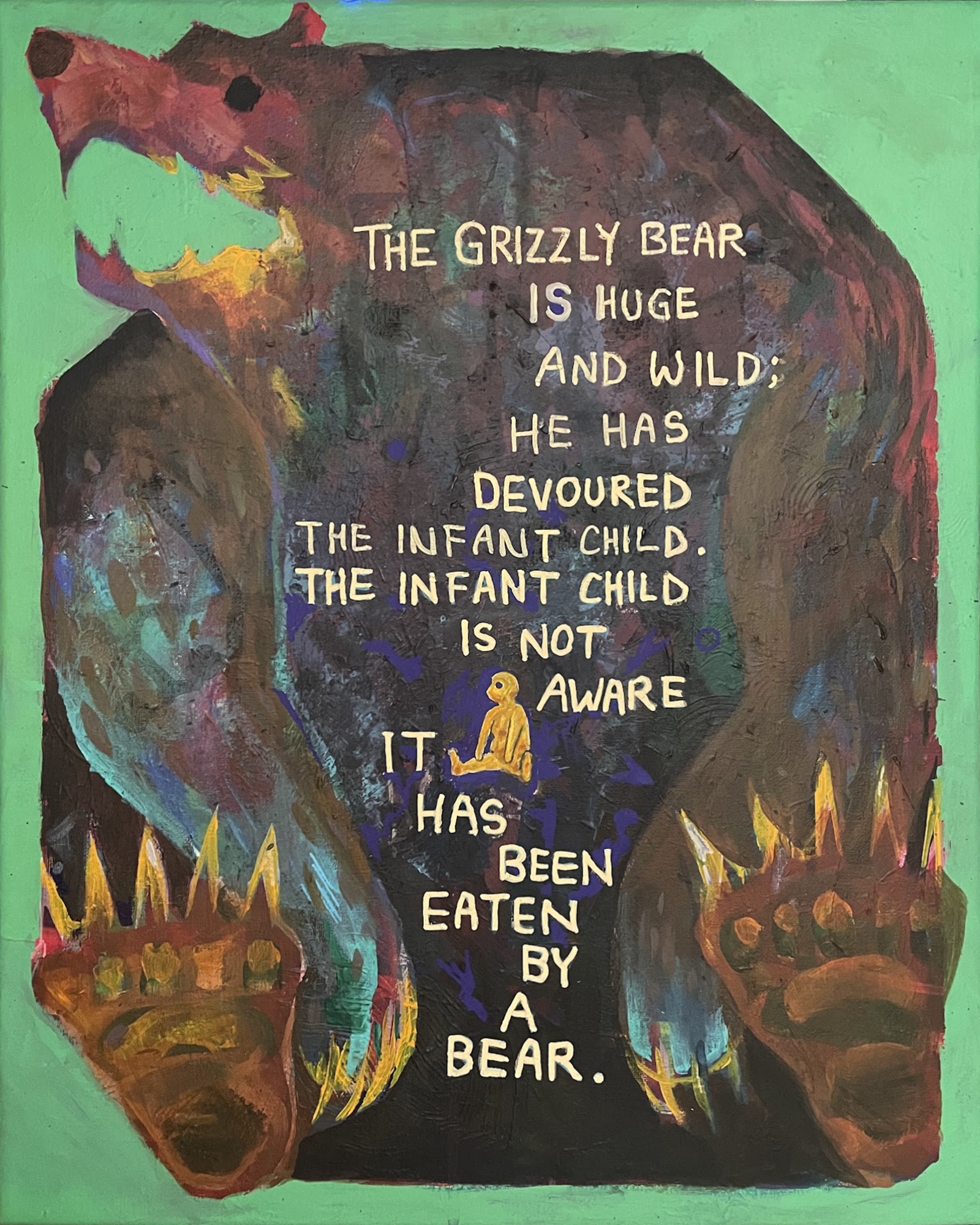

I made this painting around a short poem by Housman. I’m not sure what he meant by it, but when I read it I thought, immediately: ah, it’s us, eight billion people with their variegated hopes and fears and insanities, living with all these technologies… we are already inside the bear.

The democratization of destruction might be one of the significant answers to the Fermi Paradox. An alien species with advanced technology likely has an open forum of ideas and a large, diverse population. The larger the population and the freer the exchange of ideas, the faster the development – and the likelier they are to blow themselves up before they get anywhere.

(On the other end of the funnel, my pet answer is that eukaryotic evolution is really hard – bacteria and archaea seem to only have done it once since life arose at all.)

Unlike my friends who feel strongly about AI safety, I do not feel an urgency about ‘doing something about this’ because my views are not tied to any single technology or agent as the threat that will eventually take us out of the current high of prosperity – of global trade, of global infrastructure, of complex manufacturing. So, my crackpot views don’t really show up in the normal way I conduct life. But they do permeate my awareness and appreciation of modern civilization.

I really, truly believe that I am living in a golden age. Food, healthcare, philosophy, knowledge, mattress quality, transportation, grocery produce, brain imaging, entertainment – it’s not going to get better than this. Well, I mean, it’s getting better every day right now! But not for long. Decades, probably; centuries plural, probably not. There will be some huge setback. Someone will blow up… someone will release a… then supply chains will… and people who collectively know how to make xyz will get laid off and never come together again to make the thing work… servers containing the only copy of instructions on how to do something crucial will die…

I sometimes think about how wonderful it would be to be able to time travel to Rome at its peak, integrate there, and sightsee for years. I would wake up every day and think, holy shit, I’m in Rome at its peak. I’d know the end was not far, and be sad for it, and I’d throw myself into the glory of what was there.

Well: holy shit. I’m in the Anthropocene at its peak.

Footnotes

1. My main cruxes are: (1) I think the difficulty of self-improvement will grow faster than a bootstrapping agent’s capacities, as I suspect the solution space will grow beyond the agent’s capacity to search that space for the next capabilities improvement, (2) I don’t think it’s logically plausible that AI produced by e.g. SGD develops a subgoal that it starts prioritizing above its actual reward function – yet many takeoff scenarios seem to assume this. However, for status/anxiety reasons, I wouldn’t want to get into the weeds of this debate unless I poured another 30 hours or so into reading and thinking about this, which I don’t want to do. So, please do not email me to debate AI timelines.