This is a big picture book about how intelligence is implemented, synthesizing AI breakthroughs and preexisting research in neuroscience.

I found this especially pleasurable to read in between watching my 5-month-old daughter, who is coming along as a mind. You can read my filtered summary of the book below, but you could also buy it and immerse yourself in the possible answers to one of the most interesting questions ever: “How is intelligence implemented?” It was as pleasurable as spending a weekend with a very articulate and interesting friend.

The author says he’s a random dude who happened to get interested in the question and put together those pieces, not someone who broke ground himself. (Although he published some research papers in the process of satisfying his curiosity.) He organizes the trajectory of intelligence from the first multicellular animals to current humans in five breakthroughs:

- Steering

- Reinforcing

- Simulating

- Mentalizing (≅theory of mind)

- Language

They are all very interesting, but since the last two are well-covered, my notes focus on the first three.

Steering, 600 million years ago: corals vs nematodes

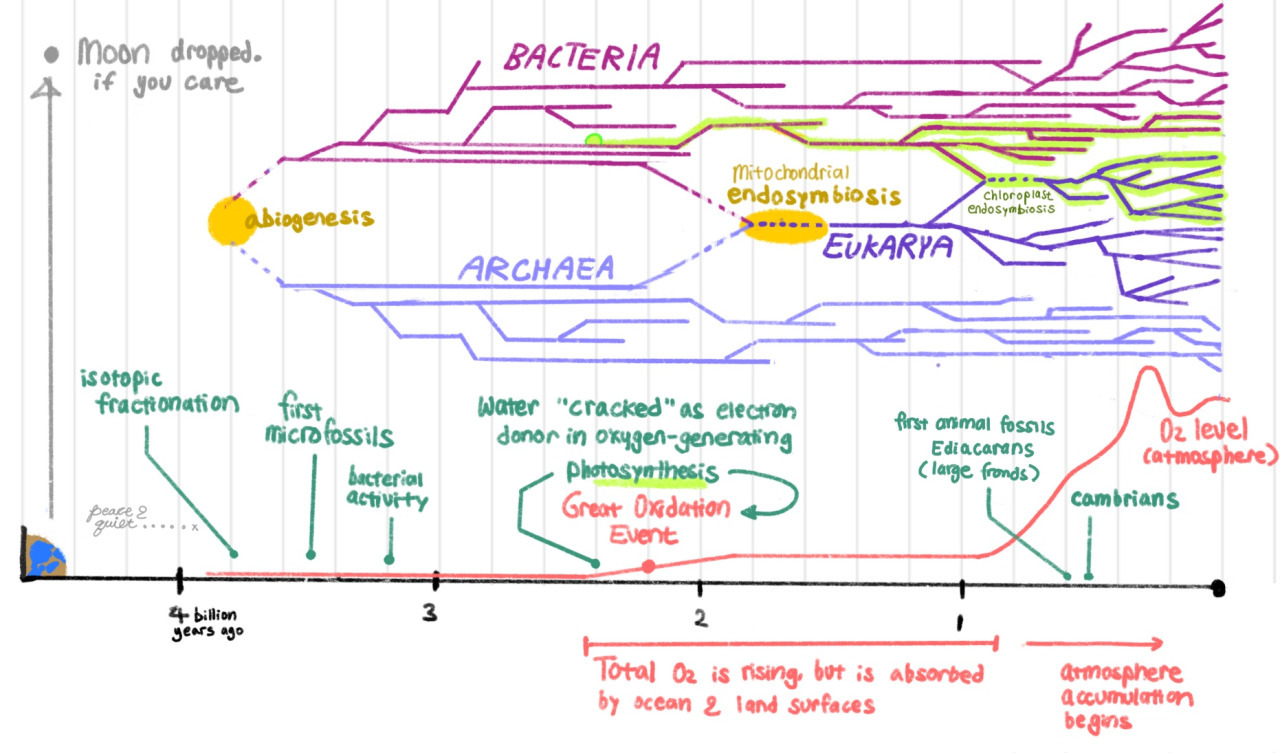

2.4 billion years ago, a bacterium cracks photosynthesis, setting off the Great Oxygenation Event, etc. Here’s a related timeline from another notes post:

Life is mostly doing anaerobic respiration until this point, when some guys start making their own sugars and storing them. These guys are tasty. Previously bacteria were too un-nutritious to eat, but photosynthetic organisms are now worth hunting and eating. This kicks off aerobic respiration, which is more efficient.


Animals and fungi, the two multicellular respiratory (in this context, as opposed to photosynthetic) branches of life, chose different paths: fungi wait for sugar-containing life to die and eat it through external digestion, by secreting enzymes. Animals have a weird pocket for this: a gut, where digestion happens inside the body.

The pocket comes with some implications.

Generally, things don’t want to be in your pocket. They are, in fact, on the lookout for stuff that seems correlated with ending up in a pocket. So the moment they’re near enough to nab, you – the pocket haver – want a speed and specificity in your reaction that plants and fungi reacting to the angle of the sun don’t have. You want muscles that can contract quickly, and neurons that set off the muscular contraction to pull prey into your mouth. These neurons come in both inhibitory and excitatory varieties, so together they can implement logic like “when you feel something brush you here, relax these muscles, contract others, and open your mouth”.

If you are a radial animal, like a jellyfish or a coral polyp, you just have the pocket, the muscles, and the neurons. If you have a bilateral body plan, your pocket is actually a tube.

You may have noticed in the animal world that the bilateral body plan is much more dominant. It’s because that body plan is good for steering. Radially symmetric animals are not good steerers/hunters – they’d need to be able to sense and move in all different directions. But even a simple bilateral tube with sensors at the front can navigate the world, finding food and escaping predators. Our cars and Roombas have a bilaterally symmetric frame with sensors at the “head” for the same reason.

Only bilaterians have brains, which strongly suggests brains came to be for steering. Let’s look at the nematode, which we guess is similar to the first brain-having animals during the Ediacaran, 600 million years ago.

Nematodes use smell to find food quite quickly, and they only need two rules:

- if food smell increases, keep going forward

- if food smell decreases, turn

(Nematodes have analogous rules for light, cold and heat, and sharp surfaces.)

This is also the logic Roombas use. You can get a long way as a living thing with these two rules.
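To make the two rules concrete, here is a minimal Python sketch of a simulated worm following them in a made-up smell field. The field (`food_concentration`), the speed, and the step count are hypothetical choices for illustration; real nematode chemotaxis is messier than this.

```python
import math
import random

def food_concentration(x: float, y: float) -> float:
    """Hypothetical smell field: concentration falls off with distance from food at (0, 0)."""
    return math.exp(-math.hypot(x, y) / 10.0)

def steer(start=(30.0, 30.0), steps=2000, speed=0.5, seed=0):
    """Follow the two rules: keep going if the smell got stronger, turn if it got weaker."""
    rng = random.Random(seed)
    x, y = start
    heading = rng.uniform(0, 2 * math.pi)
    previous = food_concentration(x, y)
    for _ in range(steps):
        x += speed * math.cos(heading)
        y += speed * math.sin(heading)
        current = food_concentration(x, y)
        if current < previous:
            # Rule 2: the smell decreased, so pick a new random heading.
            heading = rng.uniform(0, 2 * math.pi)
        # Rule 1 (implicit): the smell increased, so keep the current heading.
        previous = current
    return x, y

x, y = steer()
print(math.hypot(x, y))  # final distance from the food, much smaller than the start (~42)
```

Any heading that makes the smell stronger is kept, and any heading that makes it weaker is abandoned after one step, so the walk drifts toward the food without the worm ever representing where the food is.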

Why does a nematode need a brain, but not a coral? There is, of course, the need to coordinate a bunch of muscles, but maybe you could do that without a brain. What you really want is a central station for integrating votes on what to do.

Nematodes want to avoid light. But they like food. So they want to add up these and other factors and see what wins.

But wait! They can do even better than that!

If a nematode is well fed, it steers away from CO2; if it’s hungry, it steers towards it. CO2 is released by both food and predators, so if it’s full, pursuing CO2 for food isn’t worth the risk. Animal cells release different sets of chemicals if they’re hungry vs full, diffusing throughout the body and providing a global signal. So the nematode has to keep track of its internal state, which can change the valence of stimuli.

So, to steer, we need a bilateral body plan, the ability to categorize stimuli as having positive or negative valence, a brain where various conflicting valence votes can be added up to produce one steering decision, and the ability to modulate the valence based on internal state.
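A toy version of this vote-adding brain, as a Python sketch: each stimulus casts a signed vote (its valence times its intensity), and hunger flips the sign of the CO2 vote. The stimulus names and valence weights are invented for illustration.

```python
def co2_valence(hungry: bool) -> float:
    """CO2 signals both food and predators: attractive when hungry, aversive when fed (hypothetical weights)."""
    return 0.6 if hungry else -0.6

def steering_vote(stimuli: dict[str, float], hungry: bool) -> str:
    """Add up signed valence votes (intensity in [0, 1]) into a single steering decision."""
    valences = {
        "food_smell": 1.0,           # positive valence (hypothetical weight)
        "light": -0.8,               # negative valence (hypothetical weight)
        "co2": co2_valence(hungry),  # valence depends on internal state
    }
    total = sum(valences[name] * intensity for name, intensity in stimuli.items())
    return "approach" if total > 0 else "avoid"

print(steering_vote({"co2": 1.0}, hungry=True))   # approach
print(steering_vote({"co2": 1.0}, hungry=False))  # avoid
```

The design choice worth noticing is that internal state does not add its own vote; it changes the sign of another stimulus’s vote.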

Emotion

It turns out that, as we steer, we get the foundations of emotion for free.

The two features of emotion that are universal across the animal kingdom are valence and arousal. Here’s what it looks like for a steering creature:

- High arousal positive valence: slow swimming, frequent turns – exploit

- High arousal negative valence: fast swimming, infrequent turns – escape

- Low arousal positive valence: immobile – satiation

- Low arousal negative valence: “depressed”

Your place across this 2D emotional space is called affect. A nematode’s affect answers the questions, “Do I want to stay in this location or leave it?” and “Do I want to expend energy moving or not?”

An interesting thing about affect is that it persists long after the stimulus is gone. That persistence is necessary for steering to work, because food or predator smells don’t form a perfect gradient. Persistent affective states overcome the failure mode where you stop moving once a transient water current obscures the good or bad thing that got you moving in the first place.

Even Roombas do this – if one encounters a patch of dirt, it cleans the local area instead of moving randomly, even after the dirt is no longer detected. Dirt is predictive of other dirt nearby.

The first three affect states are intuitive: finding a good thing, escaping predators, and chilling out while it digests. But why does a nematode need to be depressed? It already has a “not moving a lot” state. Transitioning from acute stress (high arousal negative valence) to chronic stress (low arousal) is a hack for saving energy when the stimulus is not escapable. A nematode stops trying to escape a negative stimulus like toxic chemicals ~30 minutes in.
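The affect quadrants, their persistence, and the switch to low-arousal stress can be sketched together in Python. Everything numeric here is an assumption: the per-minute decay factor, the 0.5 arousal cutoff, and using the ~30-minute figure from the text as a hard timeout.

```python
from dataclasses import dataclass

@dataclass
class Affect:
    valence: float = 0.0      # > 0: good things around; < 0: bad things around
    arousal: float = 0.0      # how much energy to spend moving
    decay: float = 0.9        # hypothetical per-minute persistence factor
    stress_minutes: int = 0   # how long valence has been negative

    def step(self, stimulus_valence: float, minutes_to_give_up: int = 30) -> str:
        """Advance one minute with the given stimulus (0.0 = none) and return a behavior label."""
        # Persistence: the old state decays slowly instead of vanishing with the stimulus.
        self.valence = self.decay * self.valence + stimulus_valence
        self.arousal = self.decay * self.arousal + abs(stimulus_valence)
        self.stress_minutes = self.stress_minutes + 1 if self.valence < 0 else 0
        if self.stress_minutes >= minutes_to_give_up:
            # Acute -> chronic stress: stop spending energy on an inescapable stimulus.
            self.arousal = 0.0
        return self.label()

    def label(self) -> str:
        high = self.arousal > 0.5  # hypothetical cutoff between high and low arousal
        if self.valence >= 0:
            return "exploit" if high else "satiation"
        return "escape" if high else "depressed"

worm = Affect()
print(worm.step(1.0), worm.step(0.0))  # exploit exploit: the state outlasts the stimulus
```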

Dopamine and serotonin

Affective states are generated with neuromodulators, most famously dopamine and serotonin. In nematodes, these map really well onto the affective space. Dopamine is released when food is detected nearby, serotonin is released when the food is detected inside the worm. They are, respectively, the “something good is nearby” chemical and the “something good is actually happening” chemical.

It turns out this is still a reasonable abstraction for non-nematodes. This was not an update for me about dopamine; it was an update about serotonin, which I felt epistemic helplessness about.

Serotonin is released by the consumption of rewards and triggers low arousal, inhibiting further pursuit of rewards. If you raise rats’ serotonin levels, they stop eating and become more willing to delay gratification. (More on delaying gratification when we get to mammals.) Serotonin decreases both liking and disliking facial expressions in rats who are eating foods they normally like or dislike.

This is also what we would expect given the evolutionary origin of serotonin: serotonin is the satiation, things-are-okay-now, satisfaction chemical.

And when a nematode is under chronic stress – when the negative stimulus has persisted long enough that it switches over to low-arousal enduring – it gets serotonin back again on top of the stress hormones like adrenaline, to turn off valence and reduce arousal. “Stressed but valence is muted” sounds a lot like numbness or depression.

(This addressed a bit of my confusion about why serotonin and depression seem to have such a complicated relationship – I’m sure there’s much more going on, but this alone somewhat explains why serotonin fixes depression sometimes but not other times.)

Increased dopamine, on the other hand, makes rats impulsively exploit any nearby reward, including eating a lot of food they don’t want. They actually make the don’t-like-this face even more. If you destroy their dopamine neurons, they’ll starve to death next to food, making no attempt to eat – but they’ll make happy faces when you put food in their mouths. Humans who have been given levers to activate their own dopamine neurons will press them like crazy, and report afterwards that the sensation was like building up to orgasm without ever getting there.

Association

In addition to affect, another thing steering gives you is association.

When Pavlov discovered that dogs salivate before they taste food, he was actually studying digestion. He was trying to remove this anticipatory salivation as a confound so that he could measure how much they salivated in response to various foods. This was devilishly hard – they would form involuntary associations with fricking anything previously associated with food.

Nematodes too develop these conditional reflexes. They learn to associate new things with food, predators, or toxicity – steering towards a previously neutral smell once they associate that smell with food, etc. But most non-bilaterians don’t. It seems that when valence arose, so did the ability to change valence. If you can steer, you’re under evolutionary pressure to adjust steering decisions based on experience.

If you don’t steer, there’s not much point learning about correlations in your environment.

So that’s the very beginning of learning.

Reinforcing, 530 million years ago: nematodes vs fish

At some point our nematode-like ancestor splits into the first vertebrate – a kind of fish – and non-vertebrates.

Fish can do trial and error learning to learn an arbitrary sequence of actions (e.g. to find food in a maze). They take random actions, and refine the actions that result in a reward. What is the fish brain doing that the nematode brain cannot?

It turns out fish brains made the same breakthrough that AI made in 1994, when it learned to play backgammon. This breakthrough was algorithmic/architectural, not about compute/data/parameters. It is called temporal difference learning, and it solved the credit assignment problem more elegantly than before.

The credit assignment problem is this: If something good or bad happens, how do you know which of your environment cues to associate with that thing?

The nematode uses pretty simple hacks. It only learns that a previously irrelevant stimulus X is associated with a relevant stimulus Y if:

- X happens within a second of Y

- X is a strong stimulus

- X is not familiar

- some other predictive cue is not already associated with Y
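The nematode’s four conditions amount to a simple gate on learning. This Python sketch encodes them literally; the `Stimulus` type, the 0.5 strength threshold, and the odor names used below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Stimulus:
    name: str
    onset: float      # seconds
    strength: float   # 0..1
    familiar: bool

def nematode_learns(x: Stimulus, y_onset: float, cues_already_predicting_y: set[str],
                    min_strength: float = 0.5) -> bool:
    """True if neutral stimulus X gets associated with relevant stimulus Y under the four rules."""
    close_in_time = abs(y_onset - x.onset) <= 1.0   # X happens within a second of Y
    strong = x.strength >= min_strength             # X is strong (threshold is hypothetical)
    novel = not x.familiar                          # X is not familiar
    not_blocked = not (cues_already_predicting_y - {x.name})  # no other cue already predicts Y
    return close_in_time and strong and novel and not_blocked

smell = Stimulus("odor_x", onset=10.0, strength=0.9, familiar=False)  # hypothetical odor
print(nematode_learns(smell, y_onset=10.5, cues_already_predicting_y=set()))  # True
```

The last rule means a cue that is already doing the predicting “blocks” new learning about the same outcome.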

But fish brains and the first good backgammon-playing AI did something different.

If you’re training an AI to play backgammon, the reward – win or loss – happens at the very end. You cannot, as nematodes do, only reward the action taken in the final second. You cannot, for computational reasons, reward every single move you took to get there. So what do you reward?

The trick is to have two subsystems, an actor and a critic. The critic predicts the likelihood of winning at any given moment, and the actor chooses what action to take. The thing that is rewarded is the delta between the critic’s current P(win) and its past P(win). (Hence the ‘temporal difference’ of TD learning.) If the AI’s actor makes a good move that puts the board in (what the critic recognizes as) a much more promising state, that one most recent move gets rewarded.

This sounds circular – when you start out training this AI, the actor makes random moves and the critic doesn’t know anything about what a good board state is – but simultaneous training works. When this was applied to backgammon, the AI – which discovered the best moves on its own by playing against itself – achieved performance far better than the previous best backgammon AI, which was trained to imitate human experts.

(It does have to play against itself; it turns out not to learn if matched against a better opponent from the beginning.)
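Here is a minimal tabular actor-critic sketch in Python on a hypothetical six-square corridor where reaching the last square counts as a win. It is not TD-Gammon (backgammon needs a neural network rather than a lookup table), but it shows the same credit-assignment trick: each move is reinforced by the TD error, the reward (if any) plus the change in the critic’s prediction, rather than by the final outcome directly. The learning rates, discount factor, and softmax action choice are assumptions.

```python
import math
import random

N_STATES = 6          # hypothetical corridor; reaching square 5 is the "win"
ACTIONS = (-1, +1)    # step left or step right

def softmax_choice(prefs: list[float], rng: random.Random) -> int:
    """Actor: pick an action index with probability proportional to exp(preference)."""
    weights = [math.exp(p) for p in prefs]
    return rng.choices(range(len(prefs)), weights=weights)[0]

def train_actor_critic(episodes=500, alpha=0.1, beta=0.1, gamma=0.95, seed=0):
    rng = random.Random(seed)
    value = [0.0] * N_STATES                       # critic: predicted future reward per square
    prefs = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: preference for each action per square
    for _ in range(episodes):
        s = 0
        while s != N_STATES - 1:
            a = softmax_choice(prefs[s], rng)
            s_next = min(max(s + ACTIONS[a], 0), N_STATES - 1)
            reward = 1.0 if s_next == N_STATES - 1 else 0.0
            next_value = 0.0 if s_next == N_STATES - 1 else value[s_next]
            # TD error: how much better things look now than the critic predicted a moment ago.
            delta = reward + gamma * next_value - value[s]
            value[s] += alpha * delta    # the critic improves its prediction
            prefs[s][a] += beta * delta  # the actor reinforces only the move it just made
            s = s_next
    return value, prefs

value, prefs = train_actor_critic()
```

After training, the actor prefers stepping right in every square, even though only the final step was ever directly rewarded; the critic’s rising predictions carried the credit backwards.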

Why do we think fish brains do TD learning with an actor/critic subsystem?

First, we found the actor. It’s the basal ganglia. Unlike many other parts of the brain, the basal ganglia – which is structurally very similar across vertebrates – is really clear cut. It takes input from the rest of the brain about the animal’s action and environment. The information gets transformed until it hits the BG’s output nucleus, which contains inhibitory neurons that send connections to the motor centers.

The BG is inhibiting most actions most of the time – it’s only when specific neurons in the BG turn off that some motor circuit in the brainstem gets ungated. It’s choosing a single action to take next.
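The gating logic can be caricatured as a winner-take-all rule: every motor channel receives tonic inhibition, and only the most strongly driven channel is released, provided its drive clears a threshold. This Python sketch is a deliberate oversimplification; the action names, threshold, and inhibition level are hypothetical.

```python
def basal_ganglia_gate(action_drive: dict[str, float], threshold: float = 0.5,
                       tonic_inhibition: float = 1.0) -> dict[str, float]:
    """Return the inhibition each motor circuit receives; 0.0 means that circuit is released."""
    winner = max(action_drive, key=action_drive.get)
    # Only the strongest candidate is released, and only if its drive clears the threshold.
    released = winner if action_drive[winner] >= threshold else None
    return {action: 0.0 if action == released else tonic_inhibition for action in action_drive}

print(basal_ganglia_gate({"swim_left": 0.2, "swim_right": 0.7, "bite": 0.1}))
# {'swim_left': 1.0, 'swim_right': 0.0, 'bite': 1.0}
```

The default is “no”: when nothing is driven strongly enough, every channel stays inhibited.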

Second, we’ve found that vertebrates slightly repurposed dopamine to signal a temporal difference in reward! If you look at dopamine responses in monkeys who are given cues to expect sugar water, you’ll see that they do not correspond to pleasure, or the sugar water itself, or surprise. What dopamine clearly does respond to is a positive temporal difference in predicted reward – it gets released the moment the monkey predicts a higher future reward than it did a moment ago. It is sensitive to reward timing (do I expect sugar water in 5 seconds or 20?), reward probability, and reward intensity.

Reinforcement and reward must be decoupled for reinforcement learning to work, and dopamine became a signal for reinforcement. For nematodes, dopamine just signals ‘good things nearby’. For fish, dopamine was elaborated to communicate a TD learning signal.

Disappointment, relief, and time

Nematodes don’t experience surprise, since they don’t predict future rewards. Fish do predict, and therefore can experience disappointment (omission of expected positive stimulus) or relief (omission of negative stimulus).

Fish can be trained by the omission of good or bad things; nematodes, who associate but do not predict, cannot.

Another consequence of TD learning is that vertebrates have a time sense. They need it, to predict. If you see a cue after which a good or bad thing happens… how soon after? It’s nice to measure.

Pattern recognition

Another thing fish brains can do that nematodes can’t is pattern recognition.

Pattern recognition includes smell recognition, which is actually a pretty complex feat. A smell isn’t signaled by a single olfactory neuron going off, but by a specific combination of olfactory neurons firing together.

Recognizing that a plate is too hot is a matter of individual neurons firing, but faces, sounds, and smells require pattern recognition. There are two computational challenges:

Discrimination – how to differentiate predators, food, and mates when they trigger overlapping olfactory neurons? The answer is a large dimensionality expansion, where a large neural layer takes input from a small sensory layer, so that overlapping input patterns map onto much less overlapping patterns of activity.
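A deterministic toy in Python shows why expansion helps discrimination. Two hypothetical odors share four of their five active receptors. Each unit in a 120-unit expansion layer listens to one combination of three receptors and fires only if all three are active; this all-or-nothing rule is an assumption standing in for the sparse, thresholded wiring of a real expansion layer.

```python
from itertools import combinations

N_RECEPTORS = 10

# Hypothetical expansion layer: one unit per 3-receptor combination (10 -> 120 units).
EXPANSION = list(combinations(range(N_RECEPTORS), 3))

def expand(active_receptors: set[int]) -> set[int]:
    """Indices of expansion units that fire: a unit fires only if all 3 of its receptors are active."""
    return {i for i, inputs in enumerate(EXPANSION) if set(inputs) <= active_receptors}

def jaccard(a: set, b: set) -> float:
    """Overlap between two activity patterns: shared units / units active in either."""
    return len(a & b) / len(a | b)

odor_a = {0, 1, 2, 3, 4}   # hypothetical odor
odor_b = {1, 2, 3, 4, 5}   # a similar hypothetical odor
print(jaccard(odor_a, odor_b))                  # 4/6, about 0.67 at the receptors
print(jaccard(expand(odor_a), expand(odor_b)))  # 4/16 = 0.25 after expansion
```

Each odor activates 10 expansion units but they share only 4, so two smells that look very similar at the receptors become much easier to tell apart.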

Generalization – how to recognize a slightly different thing as part of the same phenomenon? Use Hebbian learning (fire together, wire together), so that an incomplete pattern will activate the full pattern in the cortex. This is called auto-association.
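Auto-association can be sketched as a tiny Hopfield-style network in Python: Hebbian learning strengthens connections between units that are active together, and recall repeatedly sets each unit to agree with its weighted inputs, so a corrupted cue settles into the stored pattern. The two 8-unit patterns and the corrupted cue are made up for illustration.

```python
def train_hebbian(patterns: list[list[int]]) -> list[list[float]]:
    """Hebbian weights for +1/-1 units: co-active units get a positive connection."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w: list[list[float]], cue: list[int], sweeps: int = 5) -> list[int]:
    """Set each unit, one at a time, to the sign of its weighted input; this completes the pattern."""
    state = list(cue)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            h = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if h >= 0 else -1
    return state

p1 = [1, 1, 1, 1, -1, -1, -1, -1]   # hypothetical stored pattern
p2 = [1, -1, 1, -1, 1, -1, 1, -1]   # another hypothetical stored pattern
w = train_hebbian([p1, p2])
cue = [-1, 1, 1, 1, 1, -1, -1, -1]  # p1 with units 0 and 4 flipped
print(recall(w, cue) == p1)         # True
```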

Okay, Hebbian learning works fine for generalization in smells, but generalization in vision (or audio) is a whole other thing. The variance in inputs is much larger. Fish can recognize a frog from a different angle as the same frog (although the book does not say if the fish get it right in one shot).

Auto-association can’t solve this. It could never recognize a novel object from a different angle. We don’t actually know how vertebrate brains do this. Convolutional neural networks sort of can do this, and the author thinks the key is that CNNs start off with the assumption of translational invariance – that a given object in a different location of an image is the same object. Hardcoding this assumption in makes them better.

Such an assumption given to a learning algorithm is called an inductive bias – it makes it lean towards learning one pattern rather than another when multiple explanations are possible.
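A small Python sketch of that inductive bias: a convolution applies the same feature detector at every position (weight sharing), and a global max over positions then reports whether the feature is present anywhere, regardless of where. The spot-detector kernel and the 8-pixel “images” are hypothetical, and real CNNs stack many such layers.

```python
def conv1d(signal: list[float], kernel: list[float]) -> list[float]:
    """Slide the same kernel (shared weights) across every position ('valid' cross-correlation)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) for i in range(len(signal) - k + 1)]

def detect(signal: list[float], kernel: list[float]) -> float:
    """Global max-pool: how strongly the feature appears anywhere in the signal."""
    return max(conv1d(signal, kernel))

spot = [-1.0, 2.0, -1.0]            # hypothetical detector for a single bright pixel
img_left = [0, 1, 0, 0, 0, 0, 0, 0]
img_right = [0, 0, 0, 0, 0, 1, 0, 0]
print(detect(img_left, spot), detect(img_right, spot))  # 2.0 2.0
```

The feature map itself shifts along with the object (the convolution is translation-equivariant); the pooling step is what throws away position and makes the final answer translation-invariant.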

The Cambrian Explosion

Zooming out a bit –

There was an explosion of sensory organ development – and diversity and capability in general – in vertebrates during the Cambrian.

The development of pattern recognition raised the value of sensory organs and vice versa, so they coevolved very quickly! And both of those had a ton of synergy with reinforcement learning (learning arbitrary actions). So in the Cambrian you have a kind of runaway selection, where two (or more) traits each suddenly make the others much more useful. All that change happened very fast.

Curiosity

How do you get a system undergoing reinforcement learning to explore, not just exploit? We used to just have learning AIs take random actions some fraction of the time, but it’s better to make surprise itself reinforcing.

In fish and other vertebrates, surprise itself triggers dopamine release. So: curiosity is a property of animals that can undergo reinforcement learning. Nematodes are not curious; fish are.
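One common way to make surprise reinforcing in AI is to add a bonus proportional to prediction error. The Python sketch below is a loose illustration of that idea under assumed parameters; the class, its running-average predictor, and the action name in the example are hypothetical, not a model of fish neurochemistry.

```python
class CuriousLearner:
    """Adds a surprise bonus to external reward; the bonus fades as predictions improve."""

    def __init__(self, bonus_weight: float = 0.5, learning_rate: float = 0.5):
        self.predicted: dict[str, float] = {}  # hypothetical model: expected outcome per action
        self.bonus_weight = bonus_weight
        self.learning_rate = learning_rate

    def reward(self, action: str, outcome: float, external_reward: float = 0.0) -> float:
        expected = self.predicted.get(action, 0.0)
        surprise = abs(outcome - expected)
        # Learn, so the same outcome is less surprising next time.
        self.predicted[action] = expected + self.learning_rate * (outcome - expected)
        return external_reward + self.bonus_weight * surprise

learner = CuriousLearner()
print([learner.reward("peek_under_rock", 1.0) for _ in range(4)])  # [0.5, 0.25, 0.125, 0.0625]
```

A novel action pays off even with no external reward, and the payoff shrinks as the action becomes predictable, which pushes the learner on to something else new.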

Spatial maps

Final note on fish vs nematodes: some neurons (“place cells”) in fish hippocampi activate only when fish are at a specific location in space. Fish can navigate to the same place (for example, to get food) from a variable location. Nematodes don’t do this, ants don’t do this. It’s mostly a vertebrate thing.

This is the spatial map, the first model of the world!! With it, an animal can distinguish “something swimming towards me” from “me swimming towards something”.

Simulating, 200 million years ago: cynodonts vs fish

What the heck is a cynodont?

300 million years ago, amniotes – lizard-like animals whose eggs could survive out of the water – colonized land. They weren’t the first – insects were already there – but they were very successful. They diversified into reptiles and warm-blooded therapsids.

Being warm-blooded was energy-consuming, but meant therapsids could hunt at night, when reptiles were being all sleepy and edible. They became the most successful lineage.

250 million years ago, a bunch of volcanos went off and the world became inhospitable. This was hardest on warm-blooded animals, who mostly died off. The rule of reptiles began. The main surviving therapsid lineage was our ancestor the cynodont, a burrowing creature. It became small – 4 inches. It was the ancestor of all mammals.

Take a look at this little guy. It’s about to develop a neocortex – a new part of the brain, not just an upgrade of a preexisting brain part.

The neocortical column

The neocortex is weird. The visual cortex processes visual input. There’s an auditory cortex for sound. There are parts for movement, music, language. People used to be pretty confused about what, if anything, the neocortex as a whole did.

But we can simplify the question, because the neocortex is made up of a repeating and duplicated microcircuit – the neocortical column. It’s a six-layer column of neurons that are densely connected to each other, but not very connected to neurons in other columns. These columns look identical across the neocortex even though they do very different things: there are columns that only listen to one rat whisker, and columns that are selective for specific frequencies of sound. There’s a specific type of neuron in layer 5 that always projects to areas X and Y; in layer 4 there are neurons that always get input directly from Z, etc. It’s prewired to perform some specific computation.

So instead of asking what the neocortex does, we can ask: what is that circuit doing?

That’s easier to answer. It simulates whatever input it’s hooked up to.

The way generative AIs do.

Generative AIs

There’s a strong relationship between recognition and generation. The first AI that successfully categorized handwritten numbers – without, mind you, ever being told that there were ten of them or what they were supposed to look like – did so by toggling between “getting a real image of a 7 and reconstructing it” and “generating an image of a 7 that it could recognize as a 7”. It kept flowing back between these two ends, recognizing and creating, syncing them up.

AIs have changed a lot since then, but (many? most?) generative models still learn to recognize by generating their own data, and then comparing the generated data to the actual data.

We may call this process imagination.

We’re pretty sure the neocortical circuit implements a generative model – the same neurons fire whether you really see a balloon or imagine seeing one. In both generative AIs and the neocortex, perception and imagination are inseparable. Only mammals and birds – who seem to be able to imagine – have REM sleep (and hallucinations if deprived of sleep), which is the generative process unconstrained by actual sensory input.

You probably know about inference – the thing where you can see a familiar shape in an image that only vaguely suggests it, and can’t unsee it. If there’s some other shape that image suggests, you can’t see both at the same time, because you’re perceiving your brain’s simulation that is inferred from the sense data. And it can only simulate one thing at a time.

And in fact, an AI (or a person) can’t simulate and recognize at the same time, since they use the same circuitry! People who are imagining block out much of their sense data. People who are paying attention to the world around them have a hard time imagining.

One way to think about the generative model in the neocortex is that it renders a simulation of your environment so that it can predict things before they happen. The neocortex is continuously comparing the actual sensory data with the data predicted by its simulation. This is how you can immediately identify anything surprising that occurs in your surroundings, because you’re noticing the delta between the simulation and reality.
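The compare-and-flag loop can be sketched in Python with a deliberately crude stand-in for the simulation: a running prediction of a single sensory reading, nudged toward each actual reading. A real generative model renders far richer predictions; the threshold and update rate here are arbitrary assumptions.

```python
def surprise_detector(readings: list[float], threshold: float = 2.0,
                      rate: float = 0.3) -> list[int]:
    """Return the time steps where reality departs from the running prediction by more than threshold."""
    prediction = readings[0]
    surprises = []
    for t, actual in enumerate(readings):
        error = actual - prediction   # delta between simulation and reality
        if abs(error) > threshold:
            surprises.append(t)
        prediction += rate * error    # nudge the internal model toward reality
    return surprises

readings = [10.0] * 5 + [20.0] + [10.0] * 5   # hypothetical sensor trace with one sudden event
print(surprise_detector(readings)[0])         # 5: the first flagged step is the event itself
```

The steps right after the event can be flagged too, because the updated model now briefly expects the new value to persist.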

In the context of AIs, generation (say, of the same face at many different angles) is often seen as a means to achieving recognition. But in animals, recognition is easy even for fish, which lack a neocortex, whereas simulation is only possible with this new hardware.

The fact that you can recognize without a neocortex suggests that simulation was what the neocortex was for.

Vicarious trial and error

What can you do with simulation? The big thing is vicarious trial and error.

When a rat is at a decision point in a maze, turning its head back and forth, you can see the place neurons in rat hippocampi flicker as if the rat were in different locations.

…specific hippocampal neurons encode specific locations. In a fish, these neurons become active only when the fish is physically present at the encoded location—but when Redish and Johnson recorded these neurons in rats, they found something different: when the rat stopped at the decision point and turned its head back and forth, its hippocampus ceased to encode the actual location of the rat and instead went back and forth rapidly playing out the sequence of place codes that made up both possible future paths from the choice point. Redish could literally see the rat imagining future paths.

How groundbreaking this was cannot be overstated—neuroscientists were peering directly into the brain of a rat, and directly observing the rat considering alternative futures. Tolman was right: the head toggling behavior he observed was indeed rats planning their future actions.

Meanwhile, fish have to do actual trial and error. Take a fish that has used a hole to get between two halves of a tank before. Show it food on the other side of a glass barrier. It will dart into the barrier, and give up. Only when it happens to pass into the other half by accident will it get the food. It can learn to do it again through trial and error, but it cannot do vicarious trial and error.

Another example: rats who know a dish contains overly salty food (which they hate and avoid) will dash towards it if they are very salt-deprived. Fish don’t do any equivalent of this. Fish cannot simulate eating normally bad food and finding it much more pleasant than usual. If they haven’t been reinforced to do something weird, they won’t do it. But rats can imagine tasting the overly salty thing and finding it really good this time.
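The salt experiment separates two ways of choosing, which the reinforcement learning literature calls model-free (reuse values cached from past reinforcement) and model-based (simulate the outcome under current conditions). This Python sketch uses those terms as an analogy for the fish/rat contrast; the foods, taste values, and cached numbers are invented.

```python
def taste_value(food: str, salt_deprived: bool) -> float:
    """Hypothetical tastes: how good each food is, given the animal's current internal state."""
    if food == "salty_dish":
        return 2.0 if salt_deprived else -1.0
    return 0.5  # ordinary food

# Hypothetical values cached from past reinforcement (learned while never salt-deprived).
CACHED = {"salty_dish": -1.0, "ordinary_food": 0.5}

def fish_choice() -> str:
    """Model-free: reuse cached values; the current internal state is never consulted."""
    return max(CACHED, key=CACHED.get)

def rat_choice(salt_deprived: bool) -> str:
    """Model-based: simulate eating each option under the current internal state, then choose."""
    return max(CACHED, key=lambda food: taste_value(food, salt_deprived))

print(fish_choice(), rat_choice(salt_deprived=True))  # ordinary_food salty_dish
```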

Episodic memory

Episodic memory is just a simulation! We know this because remembering past events and imagining future events use very similar neural circuitry – and because memory is, well, so bad. We hallucinate details.

Why mammals?

Why did simulation not arise before mammals? Speculatively:

- You need to be on land, since you can’t see far underwater. Planning ahead is more useful when you can see a lot of your surroundings.

- You need to be warm-blooded. Neurons fire much more slowly at cold temperatures. The only non-mammals that show an ability to simulate actions are birds, which are also warm-blooded.

The agranular prefrontal cortex

Okay, so now you can simulate. But you could simulate anything. How do you pick what to simulate? How do you know what’s useful? AIs have somewhat solved this problem for constrained problems – AlphaZero chooses its top options and plays them out, but the real world is much more complex. We don’t know exactly how the human brain does it – but we know which part does.

So far, when we talked about simulating, we were talking about the sensory neocortex, in the back of the head. In the front, though, we have the frontal cortex. Of particular interest to us in the frontal cortex are:

- the granular prefrontal cortex (gPFC), which evolved much later. Let’s table this guy for now.

- the agranular PFC – the most ancient of frontal regions, which decides what to imagine.

The aPFC is using the same neocortical column the sensory parts are using. So what is it simulating? The primary input into this region is from the hippocampus, hypothalamus, and amygdala, which suggests that the aPFC treats sequences of places, valence activations, and internal affective states the way the sensory neocortex treats sequences of sensory information. Perhaps, then, the aPFC tries to explain and predict an animal’s own behavior the same way that the sensory neocortex tries to explain and predict the flow of external sensory information?

The aPFC can learn to make predictions on what the animal will do before the basal ganglia triggers behavior. It is simulating the animal itself – and from this model, the aPFC can construct intent.

What is the point of constructing this thing, ‘intent’? It might help the animal choose what to simulate! The brain can only have a concept of what is relevant to the animal’s goals if it has a concept of goals.

The aPFC is most excited when something goes wrong or something unexpected happens. It can trigger a global pause signal by connecting to the basal ganglia – to explore its top predictions on what the animal will do next. The sensory neocortex and aPFC synchronize (probably rendering a specific simulation). Then whichever simulated option produces the reward that most excites the basal ganglia – which does not know whether the sensory neocortex is simulating the real or an imagined world! – gets chosen.

And in fact, it is being reinforced by the rewards in the simulation!

(Self help implication, I suppose: you can train yourself into new behaviors by simulating them and imagining their payoff.)
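AI has a close analogue of being reinforced by imagined rewards: Sutton’s Dyna architecture, in which an agent remembers the transitions it has experienced and replays them as simulated experience to update its values. Below is a minimal Dyna-Q sketch in Python on a hypothetical five-square corridor; the parameters are arbitrary, and it illustrates the idea rather than what the aPFC actually computes.

```python
import random

def dyna_q(real_steps=300, imagined_per_real=20, alpha=0.5, gamma=0.9, seed=1):
    """Dyna-Q on a hypothetical 5-square corridor with the goal at square 4."""
    rng = random.Random(seed)
    n_states, goal = 5, 4
    q = {(s, a): 0.0 for s in range(n_states) for a in (-1, 1)}
    model = {}  # remembered (state, action) -> (reward, next_state)

    def update(s, a, r, s_next):
        # One Q-learning step; the goal square is terminal, so it has no future value.
        best_next = 0.0 if s_next == goal else max(q[(s_next, -1)], q[(s_next, 1)])
        q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])

    s = 0
    for _ in range(real_steps):
        a = rng.choice((-1, 1))                     # explore at random in the real corridor
        s_next = min(max(s + a, 0), n_states - 1)
        r = 1.0 if s_next == goal else 0.0
        update(s, a, r, s_next)                     # learn from real experience
        model[(s, a)] = (r, s_next)
        for _ in range(imagined_per_real):          # replay remembered transitions as "imagination"
            (ps, pa), (pr, ps_next) = rng.choice(list(model.items()))
            update(ps, pa, pr, ps_next)             # imagined rewards reinforce, too
        s = 0 if s_next == goal else s_next         # start over after reaching the goal
    return q

q = dyna_q()
print(q[(0, 1)] > q[(0, -1)])  # True: stepping toward the goal is preferred from the start square
```

Most of the updates here come from replayed, not real, experience, which is the sense in which the agent is trained by its own simulations.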

Agranular

The fourth layer of the neocortical column contains a type of cell called granule cells. The “agranular” in agranular prefrontal cortex means that the aPFC is missing the fourth layer of the neocortical column.

In the sensory cortex, layer 4 is where raw sensory input flows into the neocortical column. But the part of the brain that constructs intentions doesn’t particularly want to update its predictions based on the animal’s behavior – it wants the behavior to change. If you’re thirsty and start heading towards somewhere with no water, the aPFC doesn’t want to adjust its intent-construction to assume you aren’t thirsty.

Mentalizing, 50 million years ago: chimpanzee vs rat

Chimps can hide food from each other in quite elaborate ways that show they have models of what others know about the world.

Before we talk about how this works – why? Why did they develop this?

It may be because early primates were frugivores, which is a cognitively demanding ecological niche – you want to pick fruit when it’s ripe but before it falls (and is consumed by everyone else), which is a narrow window. You need to know the timing for each fruit in the area. Ideally, you plan visits to the trees whose fruit is about to ripen.

Fruit gave them an abundance of calories to spend on bigger brains. And it gave them an abundance of time to fuck around and cause problems. Primates spend up to 20% of their day socializing, a really large slice of time.

Primate social power comes not from brute strength but good politicking: making up with guys you had fights with, identifying and allying with lower-ranking apes who have something to offer, understanding the intent of high ranking individuals and what they will do in the future, predicting who might be on top later. You need theory of mind for all this stuff. So what’s going on in the brain?

The granular PFC

Let us look at the granular prefrontal cortex – the much newer frontal cortex bit, which only primates have. In humans, the gPFC becomes uniquely active during tasks that require self-reference: considering your own feelings, evaluating your personality traits, imagining yourself in some scene.

Very approximately, the aPFC from the last section listens to the animal’s internal states to predict the animal’s behavior and produces the mental construct of intent. But where does the gPFC get its input from? The aPFC.

We have gotten very meta.

The aPFC constructs explanations of the amygdala and hippocampus (inventing intent); the gPFC might be constructing explanations of the aPFC (inventing… the mind?).

Suppose you put our ancestral primate in a maze. When it reached a choice point, it turned left. Suppose you could ask its different brain areas why the animal turned left. You would get very different answers at each level of abstraction. Reflexes would say, Because I have an evolutionarily hard-coded rule to turn toward the smell coming from the left. Vertebrate structures would say, Because going left maximizes predicted future reward. Mammalian structures would say, Because left leads to food. But primate structures would say, Because I’m hungry, eating feels good when I am hungry, and to the best of my knowledge, going left leads to food.

The gPFC lights up in monkeys and humans that have to do some task where they must model others’ intent or knowledge; people with thicker gPFC areas have bigger social networks and do better on theory of mind tasks. It’s a region that is used both for understanding yourself and others.

New learning

There’s a cool and non-obvious thing that (this implementation of) theory of mind lets you do: learning new skills through observation.

When a primate watches another primate do something, her own premotor cortex often activates as if she is doing those actions herself. This is the famous mirror neuron thing. The following thing is even more illustrative of the link between theory of mind and learning from observation:

…temporarily inhibiting a human’s premotor cortex impairs their ability to correctly infer the weight of a box when watching a video of someone picking it up (arms that easily pick it up suggest it is light, but arms that struggle at first and have to adjust their position to get more leverage suggest it is heavy), but it has no impact on their ability to infer a ball’s weight by watching a video of it bouncing on its own. This suggests that people mentally simulate themselves picking up a box when seeing someone else pick up a box (“I would turn my arm that way only if the box was heavy”).

You get theory of mind because your brain is able to pretend you are that person – in quite non-abstract, physical ways! And so, when you watch someone do a task you’ve never done before, your brain somewhat acts as if you did it yourself.

Chimps use tools, and their techniques are quite homogeneous within a group, even though another group across the river might use a different method. Theory of mind (at least, the way it’s implemented in primate brains) doesn’t just let them pick up new skills by watching; modeling what the student does or doesn’t know is also necessary for a chimpanzee to actively teach skills to its child.

Planning

Mammals already had planning once they had simulation, but primates took it to another level because theory of mind lets you simulate being your future self. Rats can imagine and plan routes to water if they are thirsty now, but they do not plan to get water for their future thirsty self when their present self is not thirsty.

Language, 0.2 million years ago: human vs chimpanzee

The breakthrough of reinforcing enabled early vertebrates to learn from their own actual actions (trial and error). The breakthrough of simulating enabled early mammals to learn from their own imagined actions (vicarious trial and error). The breakthrough of mentalizing enabled early primates to learn from other people’s actual actions (imitation learning). But the breakthrough of speaking uniquely enabled early humans to learn from other people’s imagined actions.

Unlike the previous breakthroughs, where there was at least one new structure associated with the change, there is no neurological structure found in humans that isn’t in apes. Bigger this and that, but same wiring.

The hardest thing to explain about humans, given that their brains underwent no structural innovation, is language.

(Our plausible range for language is 100-500K years ago. All modern human populations exhibit about the same language proficiency and diverged from one another ~100K years ago, which is also when symbolism like cave art shows up. Before 500K years ago, the larynx and vocal cords weren’t adapted to vocal language.)

Apes can be taught sign language (since they’re physically not able to speak as we do), and there are multiple anecdotes of apes recombining signs to say new things. But they never progress beyond the level of a young human child. How are we doing that? What’s going on in the brain?

Okay, sure, we’ve heard of Broca’s area and Wernicke’s area. They’re in the middle of the primate mentalizing regions. But chimps have those same areas, wired in the same ways. Plus, children with their entire left hemisphere (where those regions usually live) removed can still learn language fine.

If not a specific region, then what? The human ability to do this probably comes not from a cognitive advancement (although it can’t hurt that our brains are three times bigger than chimps’) but rather from tweaks to developmental behavior and instincts.

Here are two things about human children that are not true of chimp children:

At 4 months, they engage in proto-conversation, taking turns with their parents in back-and-forth vocalizations. At 9 months, they start doing “joint attention to objects”: pointing at things and wanting the parent to look at the object, or looking at what their mom is pointing at and interacting with it. (You can see that if language arose as a mother-child activity that improved the child’s tool use, there’s no need to lean on group selection to explain its evolutionary advantage.)

Chimps don’t do either. They do gaze following, yes, but they don’t crave joint attention like human children. And what does a human parent do when they achieve joint attention? They assign labels to the object.

To get a chimp to speak language, it would help to beef up their brain, but this wouldn’t be enough – you’d have to change their instincts to engage in childhood play that is ‘designed’ for language acquisition. The author’s conclusion:

There is no language organ in the human brain, just as there is no flight organ in the bird brain. Asking where language lives in the brain may be as silly as asking where playing baseball or playing guitar lives in the brain. Such complex skills are not localized to a specific area; they emerge from a complex interplay of many areas. What makes these skills possible is not a single region that executes them but a curriculum that forces a complex network of regions to work together to learn them.

So this is why your brain and a chimp brain are practically identical and yet only humans have language. What is unique in the human brain is not in the neocortex; what is unique is hidden and subtle, tucked deep in older structures like the amygdala and brain stem. It is an adjustment to hardwired instincts that makes us take turns, makes children and parents stare back and forth, and that makes us ask questions.

This is also why apes can learn the basics of language. The ape neocortex is eminently capable of it. Apes struggle to become sophisticated at it merely because they don’t have the required instincts to learn it. It is hard to get chimps to engage in joint attention; it is hard to get them to take turns; and they have no instinct to share their thoughts or ask questions. And without these instincts, language is largely out of reach, just as a bird without the instinct to jump would never learn to fly.

As weak indirect evidence that the major difference is about language acquisition instinct, not language capability: Homo floresiensis underwent a decrease in brain and body size in their island environment (until their brains were comparable in size to chimpanzees’), but they kept manufacturing stone tools whose production techniques may have required language to pass on.

Morality

I’m skipping what the book has to say about language’s consequences on early human evolution, knowledge accumulation, and some other things. Worthy topics, interesting topics, but I’ll engage with just one before ending this review: morality. Here I’ll spin briefly into my own preoccupations and thoughts.

The human species is absurdly, unbelievably altruistic compared to any other on the planet. We are also more pointedly vicious and exterminatory. Animals simply don’t help random other beings who are suffering, commit genocide, or harass a single person for over a decade over a conflict or rejection.

That our altruism and our viciousness are both downstream of language has been put forth, convincingly to me, by this book and others. (The Elephant in the Brain, I think, is where I first ran into this.) The ability to gossip enabled humans to coordinate to punish defection and reward altruism. Altruism was selected for because being heroic or generous often lets you climb the social ladder, especially if you’re heroic in a socially savvy way.

Every incremental increase in gossip and punishment of violators made it optimal to be more altruistic. Every incremental increase in altruism made it more optimal to freely share information with others using language, which in turn selected for more advanced language skills.

This new social landscape also selected for the instinct to identify and punish moral violators. And what counts as a moral violation is complicated, diverse, and sometimes absurdly idiotic.

The author briefly references Bostrom, so it may be that he is concerned about human extinction. I too am concerned about human extinction. I think it’s probably going to happen in the next century (although not from AI).

But as the author also points out, the universe is young. We are ~1% into the era of star formation. That’s a lot of time for someone else to become multiplanetary (multigalactic?) life, even if we don’t make it. The question is, what kind of people will they be?

When you read a lot about evolutionary biology, you start forming hunches about what aliens who succeed in space colonization will be like. (For example, a medium hunch: sapient children might be difficult by default. If a K-selected species has contraception long term, they will undergo selection for being loving parents, since only those who actually want children despite the difficulty will keep having them.)

It seems hard to believe a spacefaring species could become multiplanetary without language. If they have enough language to build rockets, they have enough language to punish defection (if not reward altruism, although they probably had that too). It seems likely they’ll have notions of defection that horrify me – but they will have something like morality.

If an evolved species ends up taking over the universe, they will likely have many of the qualities I care about: intelligence (enough to have left their planet), curiosity (to solve the explore/exploit problem of reinforcement learning), altruism (selected for in an evolutionary landscape with language), and the ability to consider what things are like from someone else’s point of view.

(These same filters might weakly hold for artificial intelligences too, in the long run?)

Nothing is guaranteed, but it’s a nice thought.